|---Module:text|Size:Small---|

Artificial Intelligence isn’t a distant future anymore. It’s already in our daily lives, shaping how we work, communicate and make decisions

From recommendation engines to translation tools, from chatbots to clinical support systems, AI has gone from being an academic buzzword to something we actually use without even noticing.

This big leap didn’t happen overnight. It came from being able to train models with massive amounts of data, AI model’s architecture breakthroughs, plus a huge boost in computing power. Suddenly, concepts like Machine Learning and Natural Language Processing weren’t just research topics anymore, they became the foundation of real products used by millions. But with great power comes… well, a long list of responsibilities.

Today, building and using AI isn’t just about making it work. It’s about making it safe, reliable and transparent. It’s about earning people’s trust and making sure that technology helps, rather than creating new risks.

Security Isn’t a “Nice to Have”

In companies, AI usually shows up in two ways. First, as a tool for developers, helping with code generation, documentation or testing. And sure, this can make life easier. But let’s be honest: the code these tools produce is far from perfect. It can bring in outdated patterns, bugs or vulnerabilities that no one notices at first. That’s why the rule is simple: the human is responsible for the code, not the model.

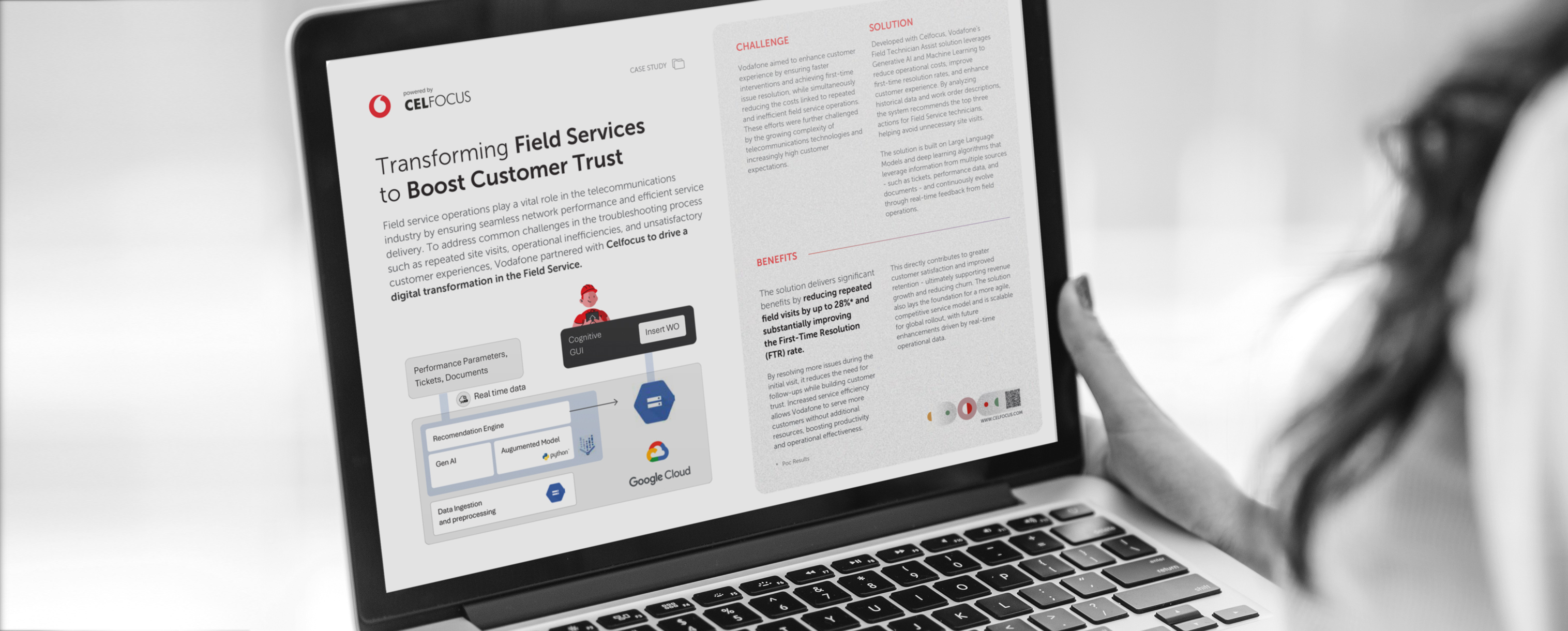

Then there’s the second scenario: AI becomes part of the product itself. It’s the chatbot that talks to customers, the recommendation engine, or the intelligent assistant. This is where things get more serious. Now, the system interacts with people, learns from real data, and makes decisions that can have real impact. That’s also where the attack surface grows. Models can be manipulated, sensitive data can leak, and a “small glitch” can turn into a major incident.

This is why security can’t be something we tack on in the end. It must be part of the design from day one, just like “privacy by design” changed how we think about data protection. We need to bring the same mindset to AI.

Why Securing AI Feels Different

With traditional software, we set the rules, and the software follows them. With AI, things are a bit messier. Models learn, adapt and sometimes surprise us. That unpredictability means we need stronger boundaries, more testing, and a much better understanding of what’s actually happening under the hood.

And this isn’t something one team can solve alone. Security, legal, compliance, product, business – everyone needs a seat at the table. Different perspectives help us catch biases, legal blind spots and unintended consequences before they hit production.

It’s All About the Lifecycle, AI security isn’t a single step. It’s a continuous loop.

At the start, we look at the data: What’s being used? Is it balanced, anonymised, properly handled? This is where we can avoid a lot of problems down the road.

When the model is being trained, we make sure it behaves consistently and fairly, without weird biases or unstable patterns. And we don’t just trust the output, we ask why the model is making those decisions. Before go-live, there’s serious testing. Throw everything at it: malicious prompts, unexpected inputs or attempts to make it leak data. We won’t catch everything, but we can lower the risk a lot.

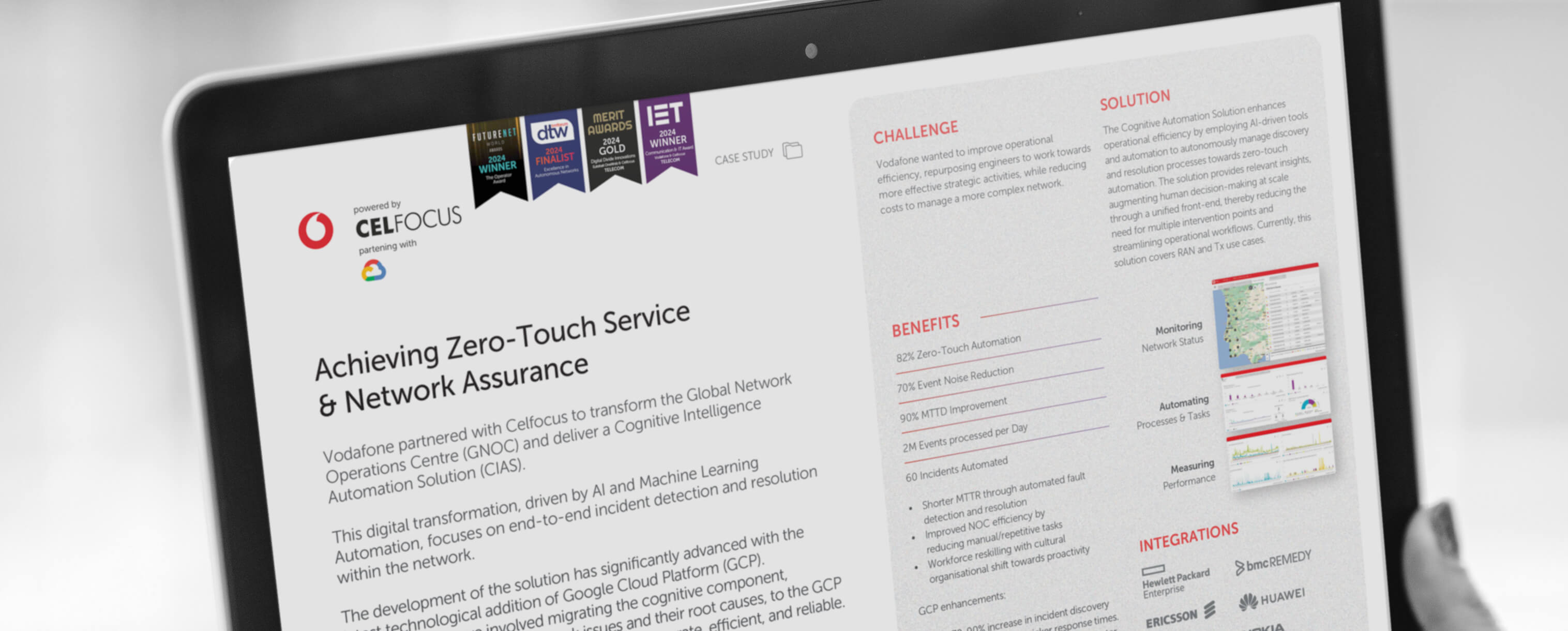

And then, once it’s in production, comes the part many teams forget: continuous monitoring. Reality changes. Users change. Attackers adapt. AI evolves. If we don’t keep an eye on how the system behaves over time, something that was safe yesterday can become a problem tomorrow.

Regulation and Ethics Aren’t Just Legal Boxes to Tick

Security isn’t the whole story. Trust also depends on ethics and transparency.

In Europe, the AI Act will set clear obligations depending on the risk level of the system. High-risk use cases such as healthcare or finance will have to follow much stricter rules. And just like the GDPR changed how we handle personal data, the AI Act will change how we design and document intelligent systems.

But regulation alone won’t make users trust what we build. That comes from being transparent. People don’t trust black boxes. If the system gives an answer, we should be able to explain why. If there are limits, they should be clear. If the model isn’t perfect (and it never is), that needs to be acknowledged. Fairness and accountability aren’t optional extras.

The “Deloitte” Hallucination

One of the clearest examples of why all of this matters happened recently with Deloitte. An AI model confidently invented false references that were listed in an official report – a textbook hallucination. No one hacked it. There was no sophisticated exploitation. The system just made things up… but it did it so convincingly that people believed it.

That single moment created reputational risk and forced the company to publicly correct the record. It’s a good reminder that not every AI failure comes from an attacker. Sometimes, the model itself is the problem.

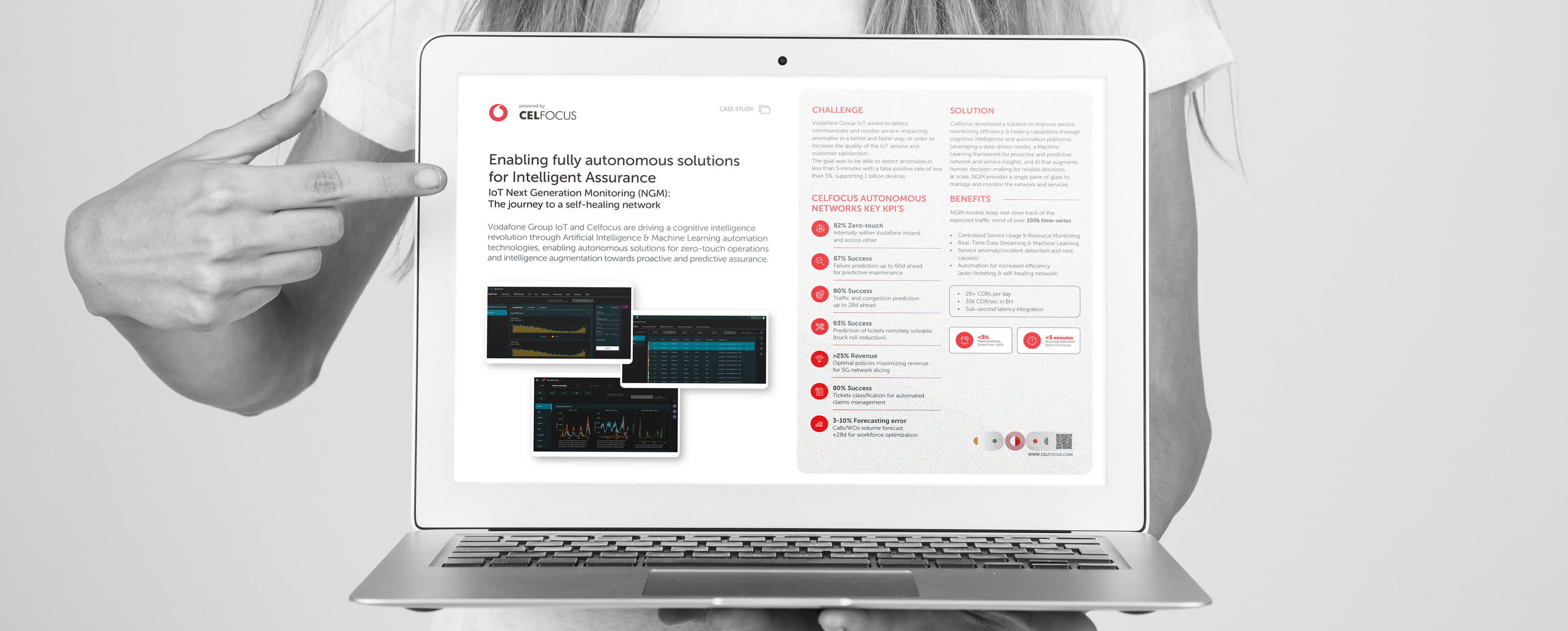

And this isn’t a one-off. In telecom, if a recommendation engine isn’t properly secured, it can leak customer data that fuels phishing campaigns. In Healthcare, biased or manipulated diagnostic systems can put patients at risk. In Finance, weak controls around credit scoring or fraud detection can lead to regulatory trouble and serious trust issues.

Responsible Innovation Starts Early

The way I see it, keeping AI safe and trustworthy isn’t about slowing down innovation. It’s about making innovation sustainable. That means thinking about security from the start, not the end. It means building systems that are explainable, traceable and actually understandable, not mysterious black boxes. It means mixing technical expertise with legal and ethical thinking.

And maybe most importantly, it means keeping a critical mindset. AI is powerful, but it’s not magic. The day we believe we fully control it is the day we lower our guard. Humility is part of security. It keeps us asking questions and double-checking what we think we already know.

Building secure, ethical and transparent AI isn’t just good practice. It’s how we build trust, avoid unnecessary drama down the line, and make sure technology works for people, not the other way around. So, if there’s one takeaway, it’s this: build trust as you build the system. Don’t wait for a headline or a crisis to care about responsibility. That’s how we keep AI useful, secure, and on our side.